Understanding the Roles of Strategic and Generative AI in Retail Price Optimization

With all the fanfare and media attention focused on generative AI, businesses are hungry to adopt cutting-edge AI technologies that promise to give them an advantage. But amid the excitement, there is also much confusion – hasn’t AI already been around for years or even decades? What’s different now?

The confusion stems from the fact that AI is not a single, precisely defined technology. For many people, it’s hard to separate what’s new in the world of AI from what is already an established use of the technology. For the sake of this blog post, instead of trying to define AI generally, I believe it’s helpful to view AI through the lens of two distinct types: generative AI and strategic AI.

The goal of generative AI is to produce complex responses to human requests, such as text, code, images, and sounds. It does this by leveraging foundation models trained on massive amounts of data. By learning to predict some parts of the data from other parts, foundation models seem to understand the underlying structure and abstractions in the data – going so far as to produce outputs beyond those they were originally trained to produce. However, evaluating generative AI systems can be tricky because the quality of their responses is often subjective, requiring substantial human assessment.

The goal of strategic AI is to make decisions that optimally achieve some measurable objective. For example, autonomous vehicles make acceleration and steering decisions that get the driver to a destination in a reasonable amount of time while avoiding collisions. While generative AI has only recently reached industrial-grade levels of usefulness, strategic AI has been around for a long time. The earliest fanfare around AI I can remember was when Deep Blue, a chess-playing expert system run on a supercomputer, beat world champion Garry Kasparov. Since then, we have seen AI systems that can surpass humans in games like Atari, Go, StarCraft, and poker, as well as real-life challenges like piloting jet fighters.

But most strategic AI applications have been in industry, where they have been used to optimize energy grids, automate manufacturing, trade financial securities, target online ads, detect fraud, operate clinical trials, and, of course, price retail goods. Since retail AI is a subject I know and love, in this article I discuss how the reinforcement learning (RL) framework behind strategic AI helps automate some of the most important decisions in retail.

Strategic AI and Reinforcement Learning

The RL framework is useful for describing a wide range of objectives. Consider the following elements within a specific RL objective:

- Agent: the decision-maker, the strategic AI itself, capable of executing various actions

- Environment: the external world that the agent observes and interacts with

- Transition system: the rules governing how the agent’s actions and the environment affect each other, and themselves, over time

- Reward function: some objective reward the agent tries to maximize based on its state and environment and the actions it takes
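To make these four elements concrete, here is a minimal Python sketch of a toy pricing loop. Everything in it is an illustrative assumption rather than a real pricing system: the `MarketState` fields, the linear demand rule, the noise levels, and the "undercut the competitor by ten cents" policy are all hypothetical stand-ins.

```python
import random
from dataclasses import dataclass


@dataclass
class MarketState:
    """Environment: what the agent can observe (hypothetical, simplified)."""
    week: int
    competitor_price: float


def transition(state: MarketState, price: float, rng: random.Random) -> tuple[MarketState, float]:
    """Transition system: toy rules for how demand and the market evolve."""
    # Assumed demand rule: sell more when priced below the competitor.
    base_demand = 100.0
    demand = max(0.0, base_demand - 20.0 * (price - state.competitor_price) + rng.gauss(0.0, 5.0))
    next_state = MarketState(
        week=state.week + 1,
        competitor_price=max(1.0, state.competitor_price + rng.uniform(-0.2, 0.2)),
    )
    return next_state, demand


def reward(price: float, demand: float, unit_cost: float) -> float:
    """Reward function: weekly profit."""
    return (price - unit_cost) * demand


def agent_policy(state: MarketState) -> float:
    """Agent: a placeholder rule that undercuts the competitor slightly."""
    return round(state.competitor_price - 0.10, 2)


def run_episode(n_weeks: int = 8, seed: int = 0) -> float:
    """Run the agent/environment loop and return total profit."""
    rng = random.Random(seed)
    state = MarketState(week=0, competitor_price=5.0)
    total = 0.0
    for _ in range(n_weeks):
        price = agent_policy(state)                    # agent acts on its observation
        state, demand = transition(state, price, rng)  # environment responds
        total += reward(price, demand, unit_cost=3.0)  # objective is scored
    return total


print(f"total profit over 8 weeks: {run_episode():.2f}")
```

A learning agent would replace the fixed `agent_policy` with one that improves from the rewards it observes; the loop structure stays the same.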

Let’s look at these elements across two vastly different use cases: autonomous driving and retail price optimization.

| RL element | Autonomous driving | Retail price optimization |
| --- | --- | --- |
| Agent | Steering, pedals, gear shift, etc. | Price of each product (by channel) |
| Environment | Roads, other cars, pedestrians, traffic signals, lighting, weather | Historical demand, competitor actions, supply chain, time of year |
| Transition system | Forces of physics governing car maneuverability | Economic forces (e.g., price elasticity) |
| Reward function | Timely arrival at destination, no damage or injury, adherence to law | Profitability, market share, brand image, adherence to various rules |

One of the benefits of the RL framework is that it allows any strategic AI objective to be reduced to a simpler abstraction so that its challenges can be compared to those of other objectives and the pros and cons of various solutions can be weighed methodically. Let’s take retail price optimization and demonstrate how we would assess the challenges of building an AI solution.

Agent. The size of the decision space available to retailers scales with the number of products, stores, channels, and customer segments. This is complicated by interaction effects between products and channels, as well as promotions, inventory, placement, etc. A more complex decision space requires more sophisticated decision intelligence – consider, for example, how much harder it is to learn chess than tic-tac-toe.

Environment. Complexity of the environment comes from the number of features as well as their observability – how knowable their true state is. At any point in time, it is often impossible to know all the factors affecting the retail environment. Competitor prices and promotions, external events affecting demand, even internal factors like inventory and advertising might not have accurate historical data.

Transition system. How do prices, promotions, seasonality, events, trends, etc. affect consumer demand? One could dedicate their career to studying these complex relationships, using advanced statistical and econometric models to understand how these variables influence purchasing decisions.
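As one small illustration of such a relationship, the sketch below estimates a price elasticity with a log-log regression, a common textbook starting point. It assumes a constant-elasticity demand curve, Q = scale × P^elasticity, and uses synthetic, noise-free data; the function name `fit_log_log` and all numbers are hypothetical. Real demand models also have to account for promotions, seasonality, and cross-product effects.

```python
import math


def fit_log_log(prices: list[float], quantities: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of ln(Q) = intercept + elasticity * ln(P)."""
    xs = [math.log(p) for p in prices]
    ys = [math.log(q) for q in quantities]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    elasticity = sxy / sxx
    intercept = mean_y - elasticity * mean_x
    return intercept, elasticity


# Synthetic weekly observations generated from Q = 1000 * P^(-2),
# so the fit should recover an elasticity close to -2.
prices = [4.0, 4.5, 5.0, 5.5, 6.0]
quantities = [1000.0 * p ** -2.0 for p in prices]
intercept, elasticity = fit_log_log(prices, quantities)
print(f"estimated elasticity: {elasticity:.2f}")
```

An elasticity of -2 means that, under this assumed model, a 1% price increase reduces unit demand by roughly 2%.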

Reward function. Difficulties in the RL reward function typically come from the sparsity of relevant data – e.g., having to wait or take several actions before the reward can be calculated. Financial impacts generally appear quickly after retail actions are taken, but metrics like brand image and customer loyalty can be more elusive and might instead be approximated by proxy.

Training Data

To achieve high performance, AI requires high-quantity, high-quality, and diverse data. This is where the retail domain gets uniquely challenging.

Quantity. The huge quantity of transactional data available to retailers can be misleading. The actual amount of useful data is much smaller once it is aggregated to a weekly or coarser level. And data older than a few years is much less relevant due to evolving consumer preferences, a changing competitive landscape, etc. Furthermore, the size of the data should be assessed in terms of “rows” relative to “columns” – for example, AI can learn more accurately from 100 weeks of data for one product than from one week of data for 100 products.
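The aggregation point is easy to see with a small sketch. The example below uses made-up transactions (one hypothetical product, `sku-1`, with 50 single-unit sales per day for 14 days) and sums them by ISO week; the helper name `weekly_units` is an assumption for illustration.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekly_units(transactions: list[tuple[date, str, int]]) -> dict[tuple[str, int, int], int]:
    """Sum units sold per (product, ISO year, ISO week)."""
    totals: dict[tuple[str, int, int], int] = defaultdict(int)
    for day, product, units in transactions:
        iso_year, iso_week, _ = day.isocalendar()
        totals[(product, iso_year, iso_week)] += units
    return dict(totals)


# Hypothetical data: one product, 50 single-unit transactions per day for 14 days.
start = date(2024, 1, 1)  # a Monday, so the 14 days span exactly two ISO weeks
transactions = [
    (start + timedelta(days=d), "sku-1", 1)
    for d in range(14)
    for _ in range(50)
]
weekly = weekly_units(transactions)
print(len(transactions), "transactions ->", len(weekly), "weekly rows")
```

Here 700 raw transactions collapse into just two weekly observations for the product, which is the scale at which a demand model actually learns.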

Quality. For data to be of high quality, ideally all relevant historical information should be available and correct, which is not the case for many retailers. This can cause inaccuracies in RL systems if not handled intelligently.

Diversity. To learn how an environment evolves over time and how an agent’s actions affect it, it is important to train on a wide variety of observations. This is where the retail domain really struggles compared to other RL domains. Some of the most celebrated achievements in RL, such as Deep Q-Networks and AlphaGo, trained on millions of data points, actively exploring previously unseen states and simulating endless alternative playthroughs. Retailers do not have this luxury!

Retail Is Complex. Domain-Specific AI Solutions Are Required.

Retail’s unique challenges call for domain-specific AI solutions. Luckily, RL has been studied extensively enough to give some useful direction.

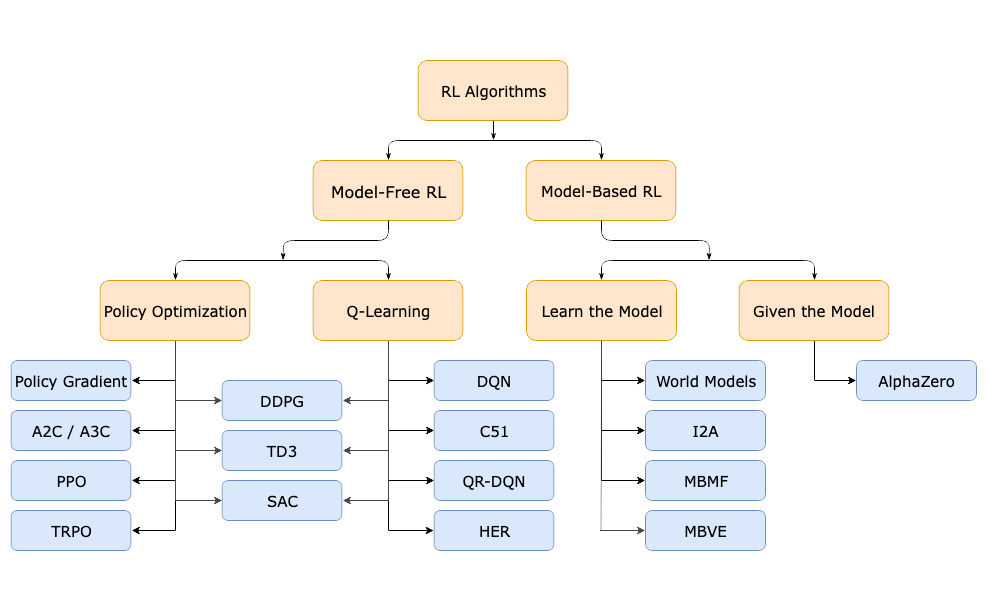

The main division in RL algorithms is between model-free and model-based designs. I like to use the analogy of instinct versus reasoning. A model-free RL agent learns to associate input from the environment with optimal actions, like a Pavlovian dog learning to instinctively salivate when hearing a bell. A model-based RL agent learns to predict how the environment will evolve and can then reason and plan accordingly.

There are pros and cons to both approaches, but the main one we care about in retail is “sample efficiency” – the ability to accurately generalize from sparse data. Model-based methods are known to be more sample-efficient, which is why AI solutions for retail tend to revolve around demand modeling. If the models can accurately forecast the effects of hypothetical decisions, they can be used for a variety of downstream tasks such as pricing, promotion, markdown, and supply chain optimization.
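A rough sketch of the model-based idea: once a demand model exists, the agent can "plan" by forecasting the outcome of each hypothetical price and picking the best one, without having to try those prices in the real world. The demand curve, its parameters, the unit cost, and the candidate price grid below are all assumptions chosen for illustration.

```python
def demand_model(price: float, scale: float = 1000.0, elasticity: float = -2.0) -> float:
    """Assumed constant-elasticity forecast: Q = scale * price ** elasticity."""
    return scale * price ** elasticity


def best_price(candidates: list[float], unit_cost: float) -> float:
    """Plan by forecasting profit for each hypothetical price and taking the best."""
    return max(candidates, key=lambda p: (p - unit_cost) * demand_model(p))


# Candidate prices from 3.50 to 7.00 in steps of 0.25.
candidates = [round(3.5 + 0.25 * i, 2) for i in range(15)]
chosen = best_price(candidates, unit_cost=3.0)
print(f"profit-maximizing candidate price: {chosen:.2f}")
```

With these assumed numbers the search settles on 6.00, which matches the closed-form optimum for a constant-elasticity curve, cost × elasticity / (1 + elasticity). A model-free agent would instead need to observe real outcomes at many of these prices before it could rank them.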

The Future: Integrating Strategic and Generative AI

One exciting development is the integration of strategic and generative AI, with ongoing research to combine RL and foundation models. For example, SIMA, an agent from Google DeepMind, can carry out human instructions within various virtual environments. Likewise, integrating large language models into a strategic AI solution for retailers could introduce greater and more varied capabilities to carry out high-level tasks.

For example, a human could instruct the AI to “show me a combination of pricing and promotion strategies that increases market share in category X by 10% while maintaining profit at its current level” or “build me a promotion on 10 products out of these 100 candidates so that they maximize overall profitability without cannibalizing each other.” Such capabilities would empower human experts through greater transparency and automation.

At Revionics, we’re committed to staying on the cutting edge of new and emerging technologies. If you would like to discuss some of the concepts covered in this blog post, feel free to reach out to me on LinkedIn.

Alex Braylan is Revionics’ Senior Manager, Data Science and AI. He is based in Austin, Texas and is currently working towards his PhD in Computer Science focused on AI and human computation.